OpenAI announced a new member of the GPT family today: gpt-3.5-turbo-instruct, an InstructGPT-style variant of GPT-3.5-Turbo. We heard the news via the email below:

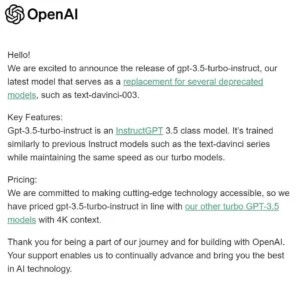

Hello!

We are excited to announce the release of gpt-3.5-turbo-instruct, our latest model that serves as a replacement for several deprecated models, such as text-davinci-003.

Key Features: gpt-3.5-turbo-instruct is an InstructGPT 3.5 class model. It’s trained similarly to previous Instruct models such as the text-davinci series while maintaining the same speed as our turbo models.

Pricing:

We are committed to making cutting-edge technology accessible, so we have priced gpt-3.5-turbo-instruct in line with our other turbo GPT-3.5 models with 4K context.

Thank you for being a part of our journey and for building with OpenAI. Your support enables us to continually advance and bring you the best in AI technology.

Instruct models follow the older completion style of interaction, where you send a single prompt and get back a single response, as opposed to the chat-style interaction used by ChatGPT, which most people are familiar with now. Theoretically, they should be better for providing structured responses and possibly better for longer-form content generation than chat models, at the cost of simple interactivity.
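To make the difference between the two interaction styles concrete, here is a minimal sketch of the request bodies each one expects. It only builds the JSON payloads and sends nothing over the network. The helper names `completion_request` and `chat_request` are our own, and the `max_tokens` default is an arbitrary choice for illustration; the endpoint paths and the `prompt` and `messages` fields follow OpenAI's public API as we understand it.

```python
import json

# Endpoints for the two interaction styles (no request is sent in this sketch).
COMPLETIONS_URL = "https://api.openai.com/v1/completions"
CHAT_URL = "https://api.openai.com/v1/chat/completions"


def completion_request(prompt: str, max_tokens: int = 256) -> dict:
    """Prompt-and-response style: the whole task is a single string."""
    return {
        "model": "gpt-3.5-turbo-instruct",
        "prompt": prompt,
        "max_tokens": max_tokens,  # arbitrary default, chosen for illustration
    }


def chat_request(user_message: str, max_tokens: int = 256) -> dict:
    """Chat style: the task is wrapped in a list of role-tagged messages."""
    return {
        "model": "gpt-3.5-turbo",
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
    }


if __name__ == "__main__":
    task = "List three uses for a paperclip as a JSON array."
    print(COMPLETIONS_URL)
    print(json.dumps(completion_request(task), indent=2))
    print(CHAT_URL)
    print(json.dumps(chat_request(task), indent=2))
```

The only structural difference is `prompt` (one string) versus `messages` (a list of turns), which is why the instruct model suits one-shot generation jobs while the chat model suits back-and-forth conversation.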

You can be sure we’ll be checking it out! gpt-3.5-turbo-instruct has the same 4,096-token context window as GPT-3.5-Turbo, at the same price point. This may become the model of choice for mass text generation, leaving ChatGPT for more interactive exploration like research and education.

It’s interesting to see OpenAI introduce another GPT-3.5-based model with a smaller context size, as their trend has definitely been toward larger context windows and more power with GPT-4 and GPT-4-32k. Perhaps they will release a 16k instruct variant, as they did with GPT-3.5-turbo-16k. It would be a logical progression.

All of this is against the backdrop of increasing competition from Anthropic’s Claude 2 large language model, with its impressive 100k-token context window. 100k tokens is a lot of context! That’s roughly 75,000 words, or a good-sized novel!
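The 75,000-word figure comes from a common rule of thumb that one token is about three quarters of an English word. The short sketch below applies that ratio to the two context sizes mentioned in this post. The 0.75 ratio is an approximation that varies with the text and the tokenizer, and `approx_words` is a helper name of our own.

```python
# Rule of thumb for English text; an assumption, not an exact conversion.
WORDS_PER_TOKEN = 0.75


def approx_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many English words fit in a given number of tokens."""
    return round(tokens * words_per_token)


# Context window sizes as stated in this post.
context_windows = {
    "gpt-3.5-turbo-instruct": 4096,
    "Claude 2": 100_000,
}

for model, tokens in context_windows.items():
    print(f"{model}: {tokens} tokens is about {approx_words(tokens)} words")
# gpt-3.5-turbo-instruct: 4096 tokens is about 3072 words
# Claude 2: 100000 tokens is about 75000 words
```

Keep in mind that the context window covers the prompt and the generated text together, so the words available for output are whatever is left after the prompt.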