These latest models, from OpenAI and Anthropic respectively, are insane! They can hold so much information in their context window length, adding a whole new level of length and complexity to chats or other text generation tasks. But let’s back up a second. What we’re really talking about here are generative AI models that are redefining what’s possible. If you want an AI assistant for complex tasks or a chatbot for customer interactions, GPT-4 32k and Claude 2 100k could become standard tools soon.

I’m particularly eager for GPT-4 32k, as it will be a huge boost to novice coders like myself who are learning with help from GPT and other LLMs. If Claude 2 100k holds up to its reputation, it will be a fantastic content writer for bloggers or content marketers. In fact, everyone wondering how to do online marketing or how to do affiliate marketing is going to want to lay hands on them.

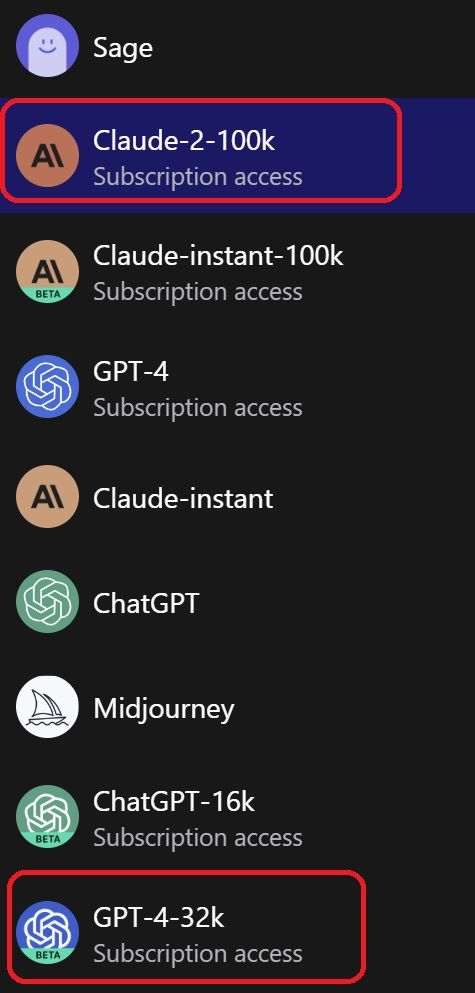

Poe.com has made them all available, so you can get access to everything in one place. It is a paid service that adds their own interface over the OpenAI (GPT-3.5-turbo, GPT-3.5-turbo-16k, GPT-4, and GPT-4-32k) or Anthropic (Claude-instant, Claude-instant 100k, and Claude-2-100k) APIs. You can also create your own chatbots from your documents, use Llama-2, and play with a number of other AI toys.

Since the publishing of this article, Claude 2 beta has been publicly released. You can chat with Claude 2 here without needing Poe. You still have to wait for GPT-4-32k unfortunately. OpenAI has announced unlimited GPT 32k for users of its new ChatGPT Enterprise service.

You can see the full model list available to The Servitor’s account here in this screenshot:

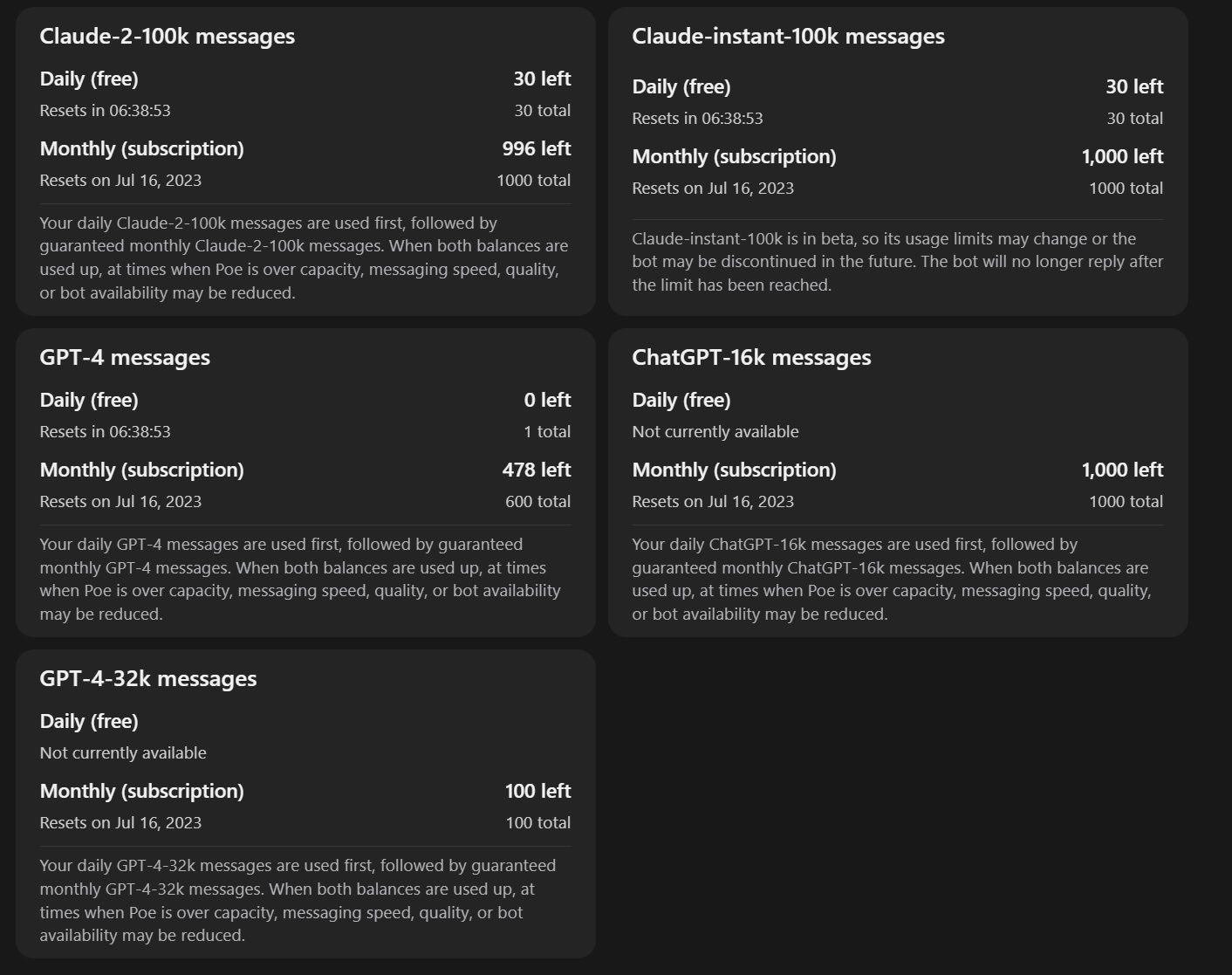

Rather than charging you by the token, as with regular API calls, Poe charges you a flat rate and provides a certain number of messages per month. you can see below the quotas:

Context and Tokens: Why Size Really Does Matter

Now, let’s get into the nitty-gritty—context and tokens. In the AI world, context is king. The more context an AI model has, the better it understands and responds. That’s why you hear people saying things like “100,000 tokens.” These tokens are like the building blocks of understanding for AI. The more you have, the clearer the picture. The 100k in “100k context” is 100,000 tokens.

Before these models, GPT-4 was already a powerhouse with an 8,192-token context window (double that of gpt-3.5-turbo). GPT-4-32k is therefore 4x the size of the original GPT-4 model. (Likewise, gpt-3.5-turbo-16k is four times the size of the original gpt-3.5-turbo). It’s like upgrading from a DIY swimming pool to an Olympic version.

Claude 2 can ingest about 75,000 words – a decent novel, a pile of legal documents, a number of research papers. I’ve done this with my own articles and stories. I can put the entirety of every article on The Servitor in a document, give it to Claude, ask an AI question, and get the correct answer. It’s so cool! This new expanded Claude is also available via the Anthropic API for API calls.