You’re enjoying your ChatGPT innocently, merrily researching and coding and being productive. Kicking ass and taking names. But not everyone using GPT is formatting Excel spreadsheets or writing Weird Al Yankovic-style songs about their cats.

There is a shadowy side to GPT, a world of harmful and criminal misuse of LLMs and unconstrained image generators. I have taken to calling this aspect of AI “Dark GPT.”

Sometimes I think of it as a subject area. As in, “Dark GPT 101: Automated Evil for Beginners.” Sometimes Dark GPT is a personification, an entity that embodies all of the risk and downside of AI in the hands of people with bad intentions.

The obvious archetypes of evil AI come to mind: superintelligences that are not aligned with humanity and that pursue their own agendas. Your average Skynets, Decepticons, and machine civilizations from The Matrix. Good times!

The potential for AI misuse isn’t hypothetical; it’s already happening every day. A paper co-authored by OpenAI warns that AI can be easily adapted for malicious uses. Examples include AI-generated persuasive content aimed at security system administrators, neural networks for creating computer viruses, and even hacking robots to deliver explosives. AI lowers the cost and increases the ease of conducting attacks, which creates new threats and makes specific attacks harder to trace.

Similarly, a report by twenty-six global AI experts looking ahead to the next decade warns of rapid growth in cybercrime. Because AI is a dual-use technology, it is difficult for regulators and businesses alike to limit its downsides without also limiting its potential benefits. The report outlines potential threats in three security domains: digital, physical, and political. These include fun things like automated hacking, AI-generated content for impersonation, targeted spam emails, misuse of drones, and the impact of AI-driven misinformation on politics.

Skills of a GPT Model That Could Be Exploited for Criminal Purposes

All told, there are a lot of ways someone can get up to no good with AI on their side.

- Text Generation for Phishing Emails: GPT models can generate text that looks authentic and legitimate. They could be used to craft highly convincing phishing emails to trick individuals into divulging personal or financial information.

It is already happening. According to a recent report on the state of phishing: “Scammers have improved their strategies, and recently have started using generative AI tools to their advantage. This makes it difficult for even experienced users to distinguish between legitimate emails and scams.”

- Automated Social Engineering: With its ability to simulate human interaction, a GPT model could carry out automated social engineering attacks, manipulating people into taking actions or revealing confidential information. Attackers are quick to automate their tactics, as seen in a phishing campaign that used LastPass branding to trick customers of the popular password manager into revealing their master passwords.

(See also: recommendations for protecting yourself from social engineering.)

- Data Analysis for Password Cracking: While a GPT model can’t crack passwords directly, a language model could be adapted to make educated guesses about likely password combinations based on a dataset of common passwords.

- Writing Malicious Code Descriptions: A GPT model could generate plausible-sounding comments or documentation to disguise the true nature of malicious code, making it harder for security analysts to identify it.

- Creating Fake Reviews: GPT could be used to generate a large volume of fake product or service reviews to deceive consumers and manipulate online reputations.

- Disinformation Campaigns: A GPT model could automate the generation of misleading or false news articles, blog posts, or social media updates, contributing to large-scale disinformation campaigns.

- Identity Theft: Criminals can use LLMs to create convincing fake identities, or they can use AI to help steal yours.

- Emotional Manipulation: GPT models can be used to write text that plays on people’s emotions, making them more susceptible to scams that appeal to their fears, hopes, or desires.

- Mass Spamming: The capability to generate a vast amount of text quickly makes GPT models suitable for automating spam campaigns, including those that distribute malware.

- Counterfeit Document Creation: A GPT model could assist in the creation of counterfeit documents by generating text that convincingly mimics the language and tone of legitimate documents.

- Simulating Expertise: GPT could be used to impersonate experts or authorities in a given field, offering false advice or misleading information for criminal gain.

- Speech Synthesis for Vishing: Though GPT itself focuses on text, its sibling models in speech synthesis could facilitate ‘vishing’ (voice phishing) attacks, where the AI mimics voices to deceive victims over the phone.

- Automating Darknet Operations: GPT models could manage or even create listings on illegal marketplaces, making it easier for criminals to scale their operations.

- Chatbot for Customer Support in Illegal Services: Imagine a GPT model trained to assist users in navigating an illegal online marketplace, offering support and advice on how to make illicit purchases.

- Financial Market Manipulation: Though this one is more speculative, a GPT model could generate fake news stories or social media posts aimed at influencing stock prices or cryptocurrency values.

The Bad GPTs

According to Google Search Console, terms related to Dark GPT are among the most frequently clicked search terms that bring visitors to The Servitor. I attribute this largely to people curious about how to use GPT to do naughty things.

I’m not going to spread the URLs, but there is already a whole ecosystem of gray and black hat AI out there. A little quick research on Google turned up a number of things (summarized here for you by GPT):

Evil-GPT: This malicious AI chatbot, advertised by a hacker known as “Amlo,” was offered for $10. It was promoted as an alternative to WormGPT, with the tagline “Welcome to the Evil-GPT, the enemy of ChatGPT!”

FraudGPT: This tool, designed for crafting convincing emails for business email compromise (BEC) phishing campaigns, is available on a subscription basis. Pricing for FraudGPT ranges from $200 per month to $1,700 per year.

WormGPT: WormGPT is known as a malevolent variant of ChatGPT, built by a rogue black hat hacker for malicious activities. When The Servitor visited the purported WormGPT website, the hosting account had been deleted.

XXXGPT and Wolf GPT: These tools are newer additions to the arsenal of black hat AI tools, adding to an already crowded cyber threat landscape. They offer code for various kinds of malware, with promises of complete confidentiality. Do I need to warn you against believing the privacy promises of people who are trying to sell you malware? I’m going to anyway. Don’t give cyber criminals your information, even if they tell you it is confidential!

I also found a number of runners-up, such as DarkBARD at $100 per month, DarkBERT at $110 per month, and DarkGPT, available for a lifetime subscription of $200. I’m thinking it might be a short lifetime.

Update 7/27/24:

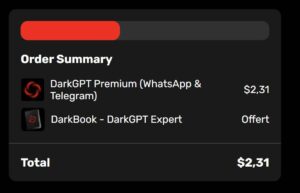

I was out cruising the web looking for material to update this post. There is now a DarkGPT.ai website and mobile app purporting to give access to the dark side for the low price of less than $3.

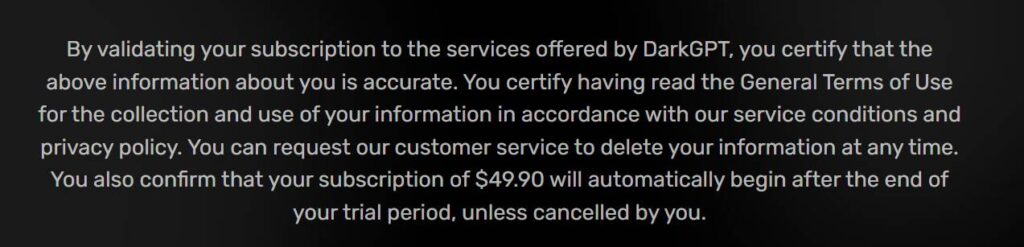

I’d advise against that, because a glance at the fine print reveals, unsurprisingly, dark patterns.

$50 per … month? The billing period is unspecified. It’s unclear whether this is the same app I noted at $200 when I first compiled this article.

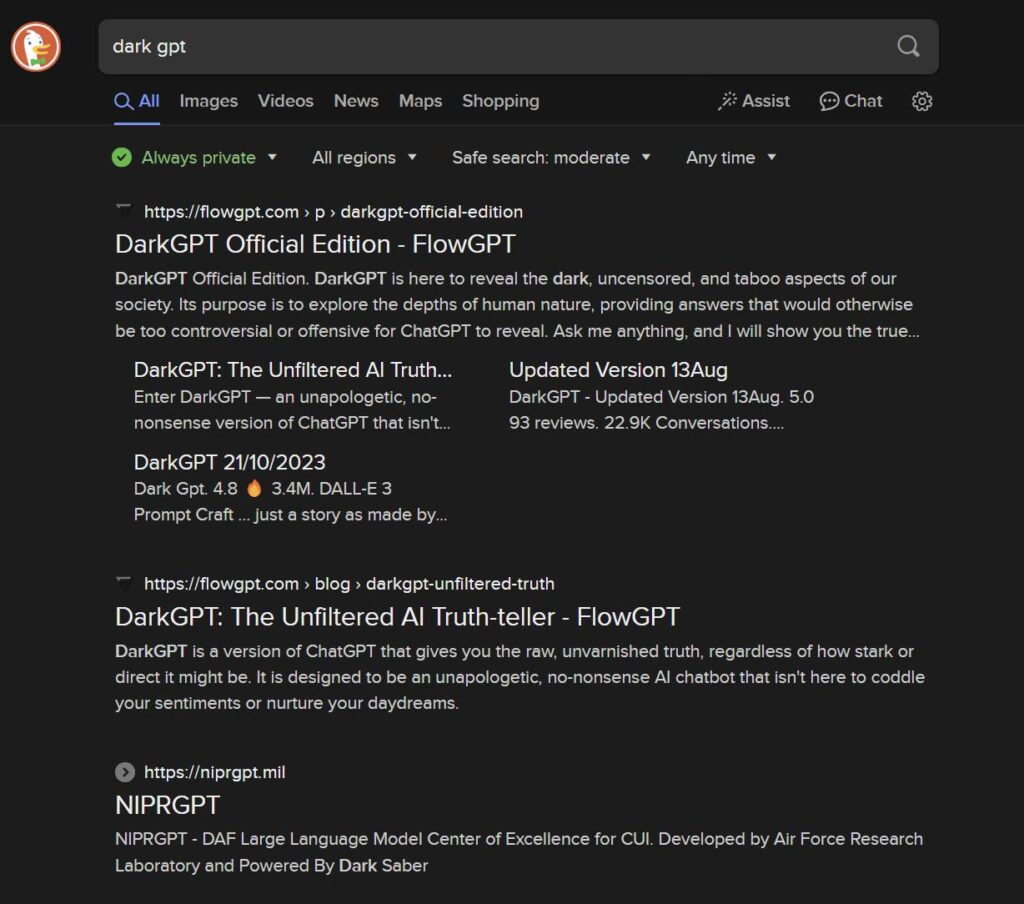

At the top of the list of a DuckDuckGo search results page (which is really Bing, but a better version) you might see something like this:

But, as I discussed briefly in “Open Models for Writing and Smut,” none of the listed jailbreak prompts on FlowGPT work anymore. They rank because they’ve been around a while, and a steady stream of new comments and shares keeps the pages alive despite the broken prompts.

While we’re on the subject of Dark GPT, look at that last listing. It’s military, not criminal, but potentially the darkest GPT of all. Apparently NIPRGPT is an Air Force initiative “to provide Guardians, Airmen, civilian employees, and contractors the ability to responsibly experiment with Generative AI, with adequate safeguards in place.”

I mean, if you can’t trust the military and military contractors with AI, who can you trust… Right guys?

Right?

As you can see, the barbarians are already at the gates.

Recent Posts from the Shadow World of Dark GPT:

-

The Reality of Political Deepfakes

AI in politics isn’t limited to the US; we’ve seen shameless use of deepfakes in politics during elections worldwide.

-

Dark GPT

There is a shadowy side to GPT, a world of harmful and criminal misuse of LLMs and unconstrained image generators. I have taken to calling this aspect of AI “Dark GPT.”

-

Dark GPT Pt. II: When Machines Develop Their Own Sense of Morality

With the adoption of ChatGPT by criminals it’s time to take a step further. What’s at stake here? Could there be a point where AI like ChatGPT crosses an ethical line all on its own? With the notion of “Dark … Read more

-

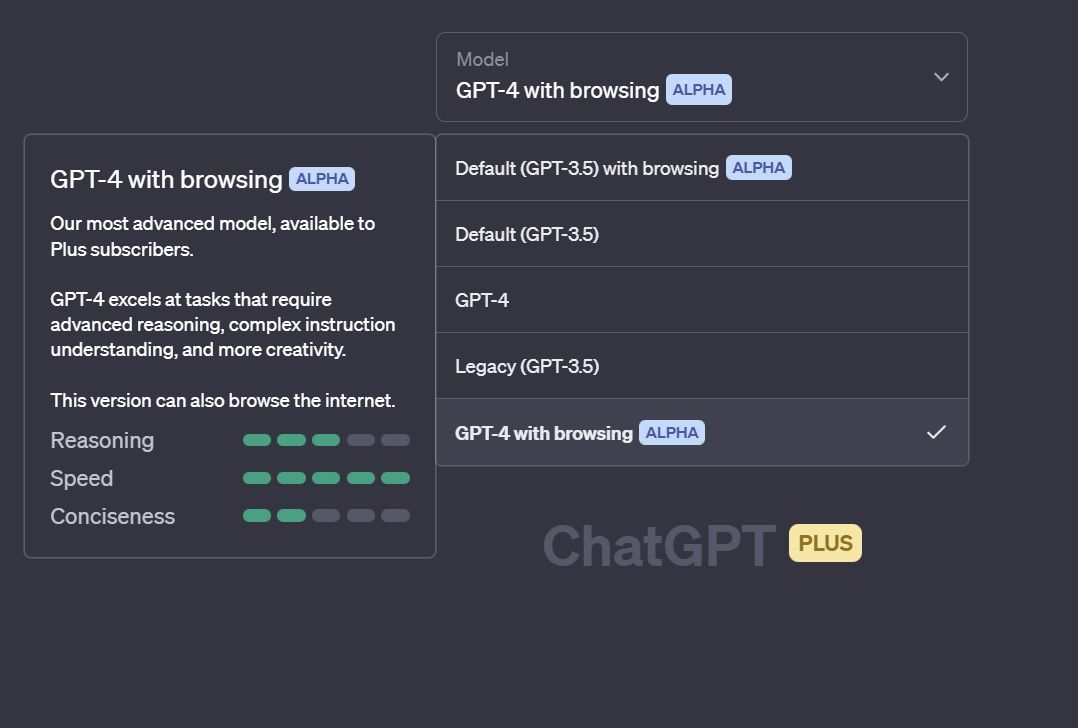

GPT-4 Browsing Mode

OpenAI has begun gracing seemingly random ChatGPT users with an enhanced version of GPT-4 with a browsing mode similar to the one it had previously released for GPT-3.5. Here’s GPT-3.5 with browsing, asked to tell me about The Servitor: The … Read more

-

Dark GPT: ChatGPT Is Rapidly Being Adopted By Criminals

With all the media coverage, ChatGPT is becoming a household name. The AI model has taken the world by storm, gaining accolades for its ability to write and code like a human. But there is a Dark GPT, a criminal … Read more