Increasingly, nonprofit organizations are turning to AI tools like OpenAI’s GPT-4 to help streamline their fundraising efforts. Like nearly every other sector right now, philanthropy is feeling the impact of AI on tasks traditionally performed by white-collar workers.

While AI can be an incredibly useful tool, it’s crucial for nonprofits to be aware of the concerns associated with its use. Here is an overview of the legal, ethical, and transparency/accountability issues that nonprofits should consider when using AI to fundraise.

Legal Concerns

Compliance with regulations is a huge part of running a successful 501(c)(3) operation. Depending on where they operate and whose data they hold, nonprofits may need to comply with data protection and privacy laws such as the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA). These regulations set out specific requirements for handling personal data and protecting donor privacy, and nonprofits need to keep them in mind as they collect and process donor data with AI assistance.

It’s also important for nonprofits to manage donor data securely and responsibly, including implementing robust data security measures and limiting data access to authorized personnel. This responsibility extends to the AI systems they use in fundraising, which should be designed to protect sensitive donor information.

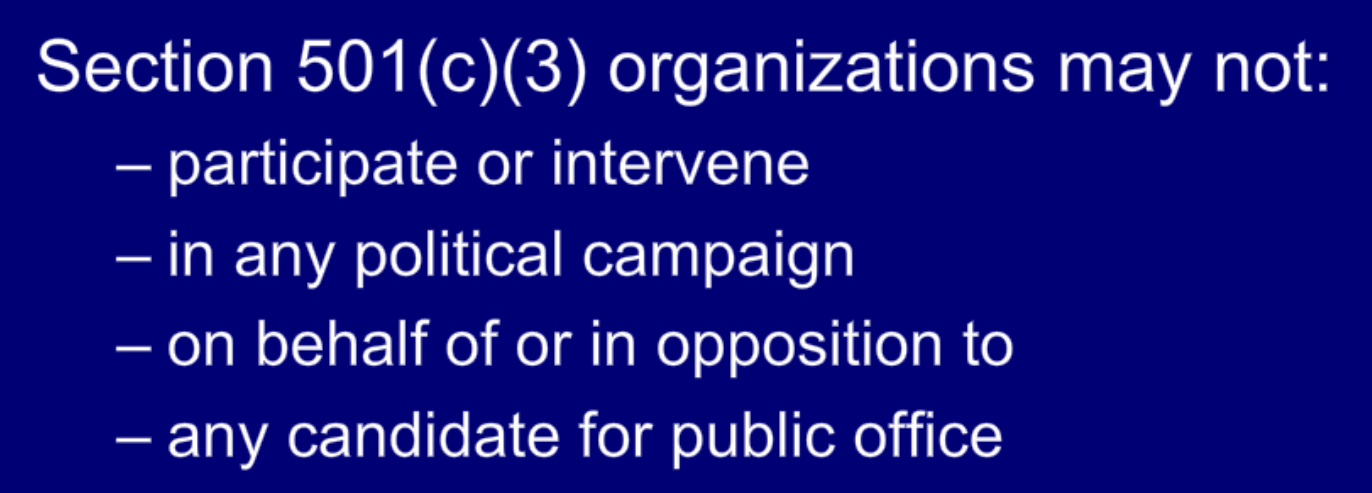

Ban On Political Activity

Perhaps the biggest potential stumbling block for charities working with AI is the ban on political activity. Nonprofits classified as 501(c)(3) organizations in the United States are strictly prohibited from engaging in political campaign activity. Violating this ban can result in severe penalties, including the loss of tax-exempt status.

It is an understatement to say that many matters are politically charged in the current environment. A “hallucination” that leads an AI to violate this ban in an organization’s name could mean very non-artificial trouble. For this reason, nonprofits using AI in fundraising must ensure that their activities maintain a non-partisan approach to political issues. This means that AI systems should be designed and programmed to avoid favoring or opposing any political candidate, party, or campaign.
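One way to put this into practice is to route AI-drafted appeals, posts, and emails to a human reviewer whenever they touch on electoral topics. The Python below is a minimal sketch under stated assumptions: the watch list `WATCH_TERMS` is hypothetical and would have to be maintained by the organization itself, its placeholder names are not real candidates, and `screen_draft` is an illustrative helper, not part of any AI vendor’s API. A keyword match cannot judge whether text is partisan, so the sketch only decides which drafts a person must read before publication.

```python
import re
from dataclasses import dataclass, field

# Hypothetical watch list the organization would maintain itself.
# The candidate entries are placeholders, not real people.
WATCH_TERMS = [
    "Candidate A",
    "Candidate B",
    "vote for",
    "vote against",
    "reelect",
    "election",
]


@dataclass
class ReviewResult:
    needs_human_review: bool
    matched_terms: list[str] = field(default_factory=list)


def screen_draft(text: str, watch_terms: list[str] = WATCH_TERMS) -> ReviewResult:
    """Flag an AI-generated draft for human review if it mentions any watch term.

    This is a coarse keyword screen, not a partisanship detector: it cannot tell
    whether a mention favors or opposes anyone, so every match goes to a person.
    """
    matched = [
        term
        for term in watch_terms
        # Whole-word, case-insensitive match, so "election" does not match "selection".
        if re.search(rf"\b{re.escape(term)}\b", text, flags=re.IGNORECASE)
    ]
    return ReviewResult(needs_human_review=bool(matched), matched_terms=matched)


if __name__ == "__main__":
    draft = "Join our gala! Unlike Candidate B, we know clean water matters. Vote for change."
    result = screen_draft(draft)
    print(result.needs_human_review, result.matched_terms)
    # prints: True ['Candidate B', 'vote for']
```

Keyword screening will miss indirect references and flag harmless ones, so it works as a trigger for review rather than as a compliance check in itself.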

Inviting Political Candidates To Speak At Events

When nonprofits invite political candidates to speak at their events, they must ensure that all candidates are given equal opportunities to participate. This means that invitations should be extended to all candidates running for a particular office, regardless of their political affiliations or viewpoints.

During such events, nonprofits should remain neutral and avoid expressing support for or opposition to any specific candidate. This applies not only to the organization’s official stance but also to any potential biases in AI-driven content, such as social media posts, email communications, and event promotions.

Nonprofits must be vigilant in ensuring that no political fundraising occurs during these events. This means that they should avoid soliciting or accepting any political contributions on behalf of, or in opposition to, any candidate or political party.

In some cases, nonprofits may wish to invite political candidates to speak at their events in a non-candidate capacity, such as when the candidate has expertise on a particular issue relevant to the organization’s mission. In such instances, nonprofits should make it clear that the invitation is not related to the individual’s candidacy and take steps to ensure the event remains non-partisan.

Inadvertent Training Biases

AI algorithms are only as good as the data they’re trained on. If the training data is biased, it can lead to biased fundraising practices.

Imagine a nonprofit organization that aims to raise awareness and funds for a specific health condition that affects men and women equally. Its AI system is trained on historical donor data showing that most past donors are women. If the AI uses this data without accounting for that skew, it may develop a fundraising strategy that targets mainly women and neglects men, even though they are just as affected by the condition. This biased approach could inadvertently reinforce gender stereotypes and limit the organization’s reach and impact.

Or consider an environmental nonprofit that works to protect natural habitats and endangered species. The organization uses AI to identify areas where its conservation efforts should be focused. The AI system is trained mainly on data from successful past projects in regions with strong local support and substantial media coverage. Consequently, the AI may prioritize projects in areas where success is easier to achieve and more visible, while overlooking regions that face greater ecological threats but lack strong local support or media attention.

In both examples, the nonprofits could unintentionally exclude specific groups or potential donors, or overlook pressing issues, because of biases in their AI systems. Organizations need to be aware of these potential biases and address them, starting with understanding the data their AI systems are trained on.
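A first step toward understanding that data can be as simple as comparing who appears in the training records with who the organization actually means to reach. The Python below is a minimal sketch that assumes a hypothetical record format (a list of dicts with a `gender` field) and reference shares the organization supplies itself, here the equal split from the health-condition example. The `representation_gaps` helper and its 10-point threshold are illustrative choices, not a standard bias metric.

```python
from collections import Counter


def representation_gaps(
    records: list[dict],
    attribute: str,
    reference_shares: dict[str, float],
    threshold: float = 0.10,
) -> dict[str, tuple[float, float]]:
    """Flag groups whose share of the data differs from a reference share.

    Returns {group: (observed_share, reference_share)} for every group in
    `reference_shares` whose observed share is off by more than `threshold`.
    """
    # Records missing the attribute are counted as "unknown" so they still
    # count toward the total instead of silently disappearing.
    counts = Counter(r.get(attribute, "unknown") for r in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if abs(observed - expected) > threshold:
            gaps[group] = (round(observed, 2), expected)
    return gaps


if __name__ == "__main__":
    # Hypothetical donor history mirroring the health-condition example.
    donors = [{"gender": "woman"}] * 80 + [{"gender": "man"}] * 20
    print(representation_gaps(donors, "gender", {"woman": 0.5, "man": 0.5}))
    # prints: {'woman': (0.8, 0.5), 'man': (0.2, 0.5)}
```

With 80 female and 20 male past donors measured against an equal reference split, both groups are flagged as off by 30 points. A check like this only surfaces skew along attributes the data actually records; it does not by itself say how the AI system should be corrected.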

Transparency And Accountability

Transparency is key to building trust with donors and stakeholders. Nonprofits should openly communicate how they use AI in fundraising and the steps they’re taking to ensure legal and ethical compliance. Doing so demonstrates the organization’s commitment to responsible AI use.

By proactively addressing potential concerns related to AI in fundraising, nonprofits can help assuage any fears or reservations donors and stakeholders may have. This might include discussing the organization’s data privacy policies, outlining efforts to eliminate bias, and highlighting the ethical guidelines governing AI use.

Balancing Benefits Of AI With Risks

AI offers numerous benefits to nonprofits, such as improved efficiency and the ability to tailor fundraising appeals to specific donors. However, it is essential to balance these advantages with the potential risks and concerns associated with AI use. Nonprofits should conduct thorough assessments of their AI systems, implement safeguards to mitigate potential issues, and remain vigilant in monitoring AI-driven activities to ensure they align with legal and ethical guidelines.

Ultimately, by addressing the legal, ethical, and transparency/accountability concerns related to AI use, nonprofits can successfully harness the power of AI to further their missions while maintaining the trust and support of their donors and stakeholders. As AI continues to evolve, it is crucial for nonprofits to stay informed about emerging best practices and to adapt their strategies accordingly to ensure they remain in compliance with all relevant laws and regulations.