With material by Gina Gin and Daniel Detlaf – Updated 9/16/2024

Recapping Asilomar

If you’ve been following events in AI since at least the dawn of ChatGPT, you might remember the big stir in March 2023, shortly after the release of GPT-4, caused by an open letter calling for a pause of at least six months on training AI systems more powerful than GPT-4.

The letter was grounded in a set of ideas called the Asilomar Principles, named for Asilomar, the conference grounds in Pacific Grove, California, where the 2017 Conference on Beneficial AI was held.

The Asilomar Principles are a set of 23 principles meant to embody a vision for AI that will benefit all of humanity. They were chosen through a process of drafting and refinement that ended with 23 principles, each of which received support from at least 90% of the conference participants.

They are broadly broken down into three categories: Research Issues, Ethics and Values, and Longer-Term Issues.

Research Issues

The Research Issues section opens the Asilomar AI Principles with a central premise: the goal of AI research shouldn’t be intelligence for its own sake, but beneficial intelligence. If AI isn’t improving life for humanity, what is the point, especially given the possible dangers?

It asks questions related to the process of development itself:

- How can we make future AI systems highly robust, so that they do what we want without malfunctioning or getting hacked?

- How can we grow our prosperity through automation while maintaining people’s resources and purpose?

- How can we update our legal systems to be more fair and efficient, to keep pace with AI, and to manage the risks associated with AI?

- What set of values should AI be aligned with, and what legal and ethical status should it have?

Ethics and Values

The Ethics and Values section does what its name suggests. It stresses that AI systems should be designed to align with human values, ensuring fairness, transparency, and respect for individual rights. The principles call for the avoidance of biases, the protection of privacy, and the prioritization of well-being and autonomy. The benefits of AI, including the economic prosperity it creates, should be shared broadly across societies.

The attendees also make the following massive understatement:

AI Arms Race: An arms race in lethal autonomous weapons should be avoided.

Longer-Term Issues

The Longer-Term Issues section focuses on the potential future impacts of advanced AI on society. It calls for considering the long-term consequences of AI development NOW, before it is too late, including the possibility of achieving human-level AI and beyond.

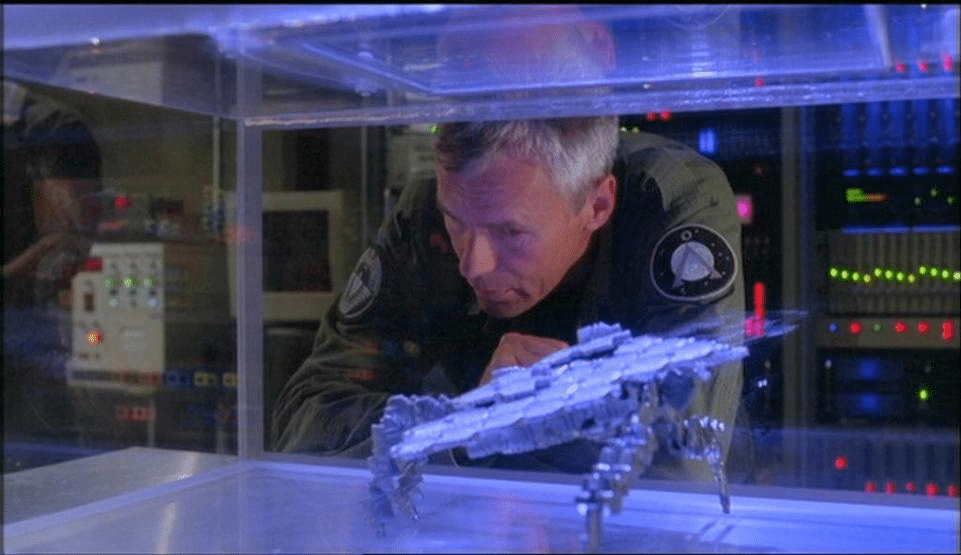

The section strongly suggests that we limit ourselves for our own good. We don’t want to unleash the Replicators. This is the “gray goo” or “paperclip planet” scenario.

Recursive Self-Improvement: AI systems designed to recursively self-improve or self-replicate in a manner that could lead to rapidly increasing quality or quantity must be subject to strict safety and control measures.

7 Years After Asilomar, 1 Since the Letter

So are we following the principles?

No. Not really. And there was certainly no pause in development.

In fact, Elon Musk, one of the signatories, seems to be hell-bent on training the biggest, baddest LLM of them all using a new supercomputer containing 100,000 Nvidia GPUs. Considering he also prides himself on the uncensored nature of his Grok AI, I’d say all safety concerns have been forgotten.

OpenAI has released its new reasoning model, o1, which it claims represents an advance in the use of AI to tackle complex multi-step problems like those found in science and coding. It is also, coincidentally, the first model the company has rated as a “medium” risk for helping bad actors develop bioweapons. NBD.

Meta, of all companies, has arguably been doing the most for open and transparent LLM research with its Llama series of models, but those models are widely held to be less performant than the proprietary (and often paid) models that dominate right now, like OpenAI’s GPT models and Anthropic’s Claude 3.

Meanwhile, OpenAI quietly amended its usage policies to allow cooperation with the military.

Palantir, the surveillance and military data broker that is up in everyone’s business, held a conference on AI in the military. Turns out the billionaires just want peace. Through superior firepower.

Original Post

What Are The Asilomar Principles? A Look At The Framework For AI Ethics And Safety

Let’s talk about the concepts behind the open letter calling for a pause in AI development that is making headlines today: the Asilomar AI Principles. These principles offer a framework for the ethical development of artificial intelligence, and they have been cited by the letter, whose signatories include various tech leaders such as Elon Musk.

So, what exactly are the Asilomar AI Principles?

In a nutshell, they’re a set of 23 guidelines created during the 2017 Asilomar Conference on Beneficial AI. These principles aim to promote the safe and responsible development of AI technologies, focusing on areas like research, ethics, values, and long-term safety. The overarching goal is to ensure that AI systems are developed in a way that benefits humanity as a whole.

Some key points among the principles include the importance of:

- Broadly distributed benefits: AI technologies should be designed and developed to benefit all of humanity, avoiding uses that could harm humanity or concentrate power unduly.

- Long-term safety: AI developers should prioritize research that ensures AI safety and work together to address global challenges, even if it means sharing safety and security research.

- Value alignment: AI systems should be aligned with human values, and their creators should strive to avoid enabling uses that could compromise these values or violate human rights.

These principles are particularly relevant today, given the rapid advancements in AI technology and the potential risks they pose. The Asilomar AI Principles provide a valuable foundation for the dialogue on how to move forward with AI development.