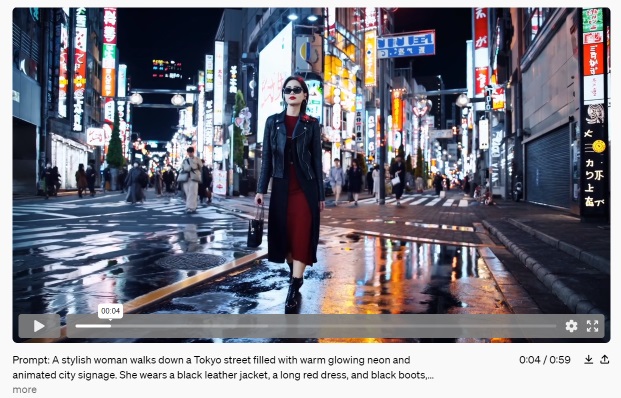

OpenAI has released Sora, a new text-to-video AI model that creates complex video scenes from natural language prompts.

Based on the input prompt, Sora can generate scenes with multiple characters, specific types of motion, and accurate details of both the subject and the background.

OpenAI claims that Sora has a solid understanding of how objects relate to one another, essentially modeling the world it generates. I’m skeptical, however, of the claim that it is a “world model.” Still, the videos are fascinating. It’s easy to see the potential, and they are a LONG way from the few-second clips being generated last year.

Sora is part of OpenAI’s mission to develop and release increasingly powerful AI models, while also taking steps to ensure their “responsible” use. Sora is being released to red teamers for evaluation, as well as to visual artists, designers, and filmmakers to gain feedback on how to improve the model for creative professionals.

OpenAI sees Sora’s limited release as part of their commitment to engaging with a broad range of stakeholders and the public in the development and deployment of AI technologies. By sharing their research progress and working with people outside OpenAI, they hope to give the public a sense of what AI capabilities are on the horizon and gather feedback on how to make AI technology safe and beneficial for everyone. You can read OpenAI’s full announcement on the release of Sora on their website.