One of the great things about LLMs, if you know or are willing to learn a little coding, is their ability to string together all the little parameters and modifiers in a given software library.

“Coding” is Often Reading the Instruction Manuals for Tools

If you don’t code, let me explain briefly. Coding is about problem solving, and often the problems that come up are repetitive. A lot of problems have perfectly good solutions already publicly available. So in practice, programmers often reuse code by loading packages of pre-made code called libraries, modules, or various other names depending on the language and context.

These libraries are basically toolkits. You don’t have to build your own power saw, you just need to grab it from your toolkit, read the instructions, plug it in, and start cutting.

The instructions for these software tools can be lengthy and complex, however. If they have good documentation they aren’t hard to work out. But “not hard” is not the same as “simple” or “quick”.

Imagine if, before using your power saw, you first had to define the radius of the blade, the speed of the motor in RPM, the angle of the blade, and so on. It’s all stuff you can figure out with the manual, but it takes some thought and time.

Same with software libraries. It’s all there, pre-made, instructions provided, but some configuration is needed to put it to work. LLMs can speed that process up, because for widely used libraries they tend to know the different settings, what they do, how they affect each other, and what to set them to in order to achieve certain goals.

Creating the GIF with GPT-4o and Python

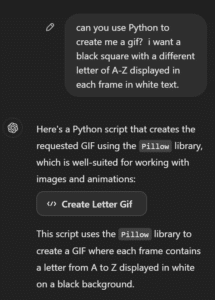

I wanted to make a GIF of the alphabet flashing in a cycle for a small side project. I knew that Pillow, a Python package for image manipulation, could probably do it. And that’s about all you need to know: whether the tools and code exist and are common enough that GPT, Claude, Llama et al. might have been trained on them.

I typed in a straightforward prompt asking GPT to create a Python script that cycles through the alphabet in GIF form, and it was done. GPT has the library’s handbook more or less memorized and can provide answers faster than I can scroll through the docs.

Below you can find the source code and the end result. If you’re following along at home and have Python installed, you can save it to a file and run it with “python yourfilename.py”. You might need to install Pillow first (pip install Pillow).

from PIL import Image, ImageDraw, ImageFont
import string

# Constants for the GIF
width, height = 200, 200  # Size of each frame in pixels
bg_color = "black"  # Background color
text_color = "white"  # Text color
font_size = 200  # Font size for the letters
frame_duration = 400  # Duration of each frame in milliseconds
output_gif = "letters.gif"

# Create a list to store each frame
frames = []

# Try to load Arial; fall back to Pillow's built-in font if it is not found
# (the built-in font is small, so the letters will not fill the frame)
try:
    font = ImageFont.truetype("arial.ttf", font_size)
except IOError:
    font = ImageFont.load_default()

# Create frames for each letter from A to Z
for letter in string.ascii_uppercase:
    # Create a new image with a black background
    img = Image.new("RGB", (width, height), bg_color)
    draw = ImageDraw.Draw(img)

    # Measure the text to center it; textbbox returns (left, top, right, bottom)
    left, top, right, bottom = draw.textbbox((0, 0), letter, font=font)
    text_x = (width - (right - left)) / 2 - left
    text_y = (height - (bottom - top)) / 2 - top

    # Draw the letter in white
    draw.text((text_x, text_y), letter, font=font, fill=text_color)

    # Append the frame to the list
    frames.append(img)

# Save the frames as an animated GIF
frames[0].save(output_gif, save_all=True, append_images=frames[1:], duration=frame_duration, loop=0)

print(f"GIF saved as {output_gif}")
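
The centering arithmetic in the script is easiest to check in isolation. Pillow’s textbbox returns four values (left, top, right, bottom), and left and top are often non-zero, so they need to be accounted for when centering. Here is a minimal sketch of that calculation in plain Python. The helper name centered_origin and the bounding-box numbers are made up for illustration; they are not part of Pillow and were not measured from a real font.

```python
def centered_origin(canvas_size, bbox):
    """Return the (x, y) to pass to draw.text so the drawn glyph is centered.

    canvas_size: (width, height) of the frame in pixels.
    bbox: (left, top, right, bottom), the shape returned by
          draw.textbbox((0, 0), text, font=font).
    """
    canvas_w, canvas_h = canvas_size
    left, top, right, bottom = bbox
    glyph_w = right - left
    glyph_h = bottom - top
    # Subtract left/top to cancel the offset baked into the bounding box.
    x = (canvas_w - glyph_w) / 2 - left
    y = (canvas_h - glyph_h) / 2 - top
    return x, y


# Hypothetical bounding box, for illustration only.
example_bbox = (4, 40, 124, 184)
print(centered_origin((200, 200), example_bbox))  # (36.0, -12.0)
```

A negative y is fine here. It means the text origin sits above the frame so that the visible part of the letter lands in the middle.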

Sidenote: Canvas and Artifacts

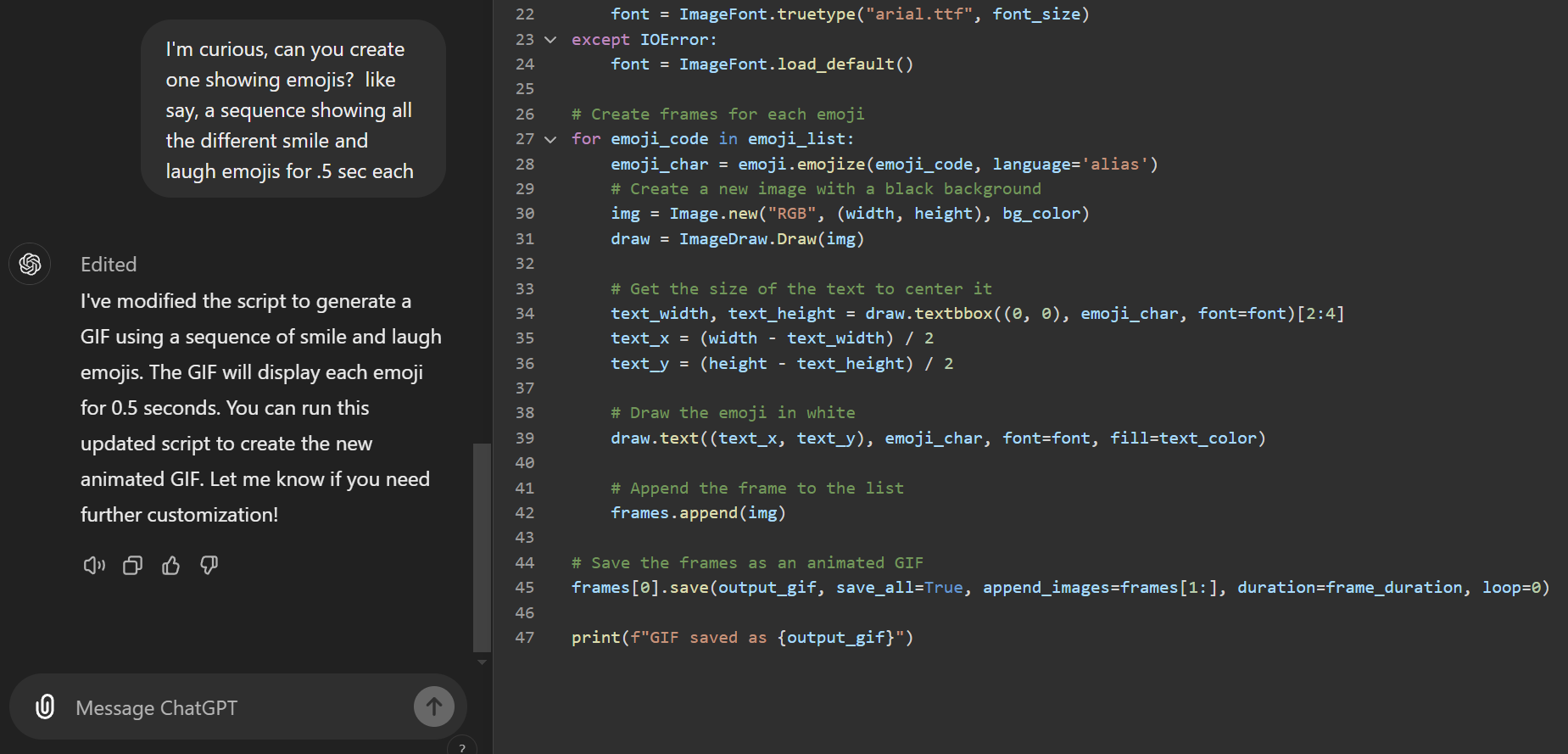

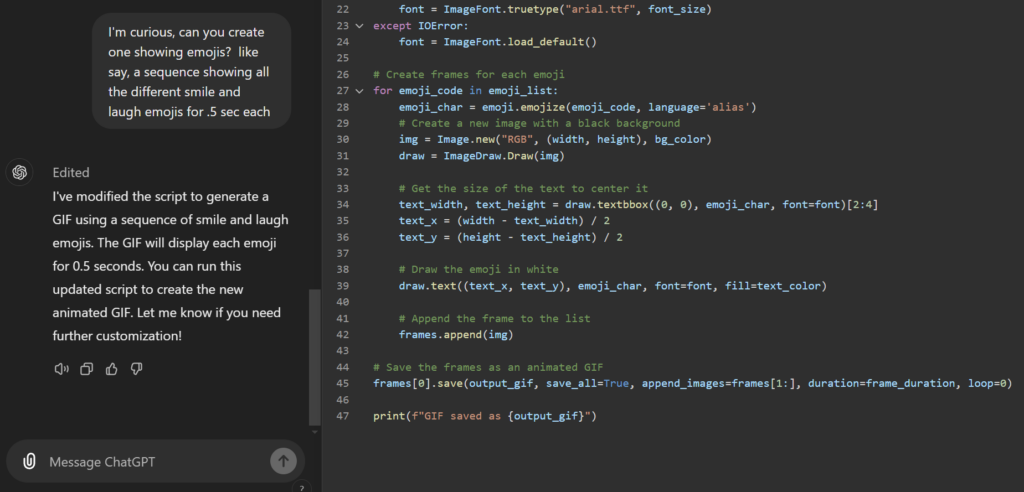

While we’re at it, let me briefly mention two new AI interfaces: OpenAI’s Canvas and Anthropic’s Artifacts. Both offer an improved way of interacting with the AIs when working on files. You can see the canvas open in the feature image for this post.

Canvas is akin to a digital workspace: an editor that opens with the code GPT generates and lets you make your own edits, which GPT can then see. When GPT changes the code, it rewrites it in the same window, and a versioning system lets you undo and redo, moving through the versions of the file generated during your conversation.

It’s OpenAI’s response to Anthropic’s Artifacts feature that came out a few months back and offers a similar iterative workspace for working on file-based tasks. They are both great features and fun to play with.